01 | The Paradigm Shift

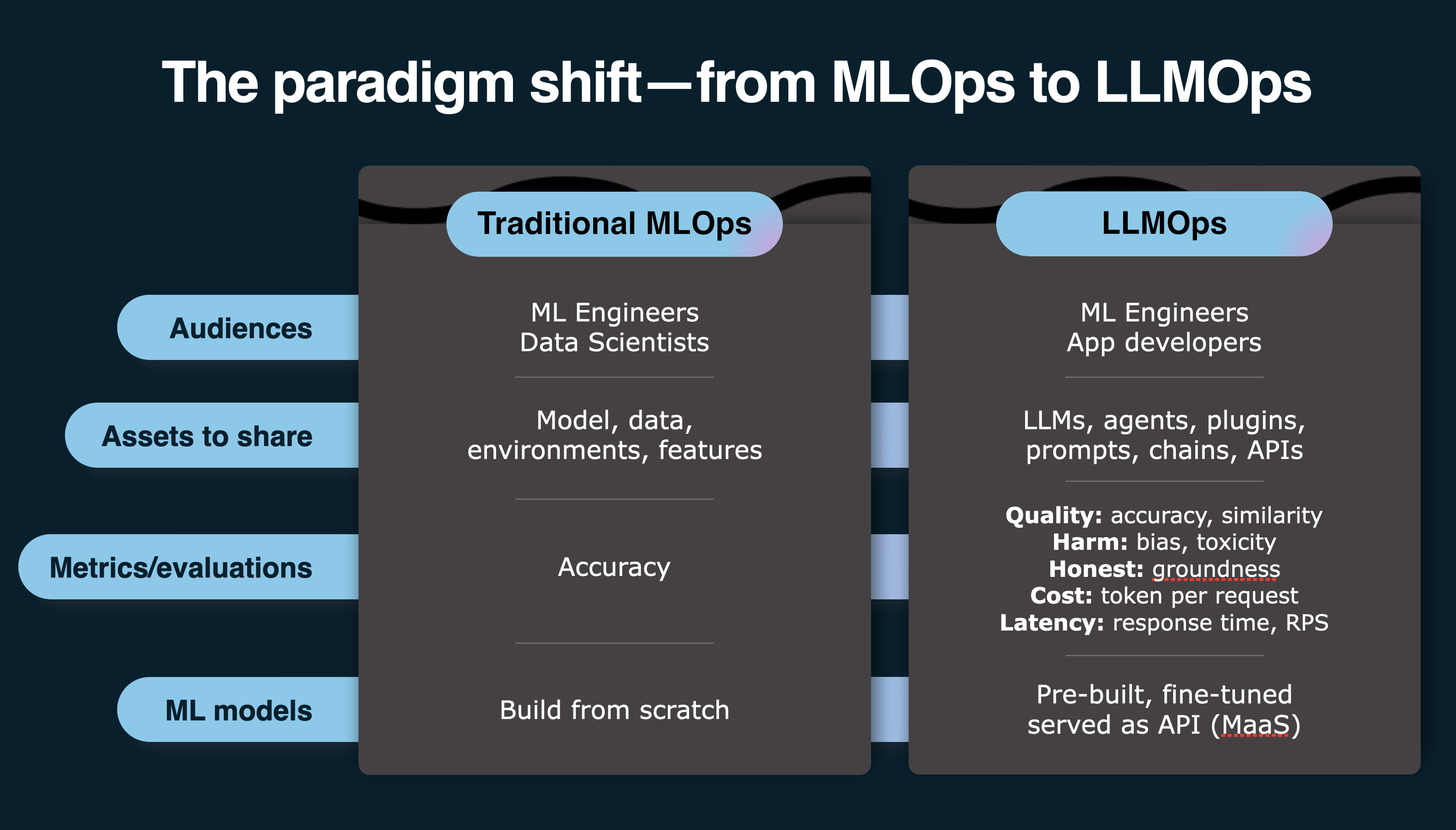

Streamlining the end-to-end development workflow for modern "AI apps" requires a paradigm shift from MLOps to LLMOps, one that acknowledges their common roots while staying mindful of their growing differences. We can frame this shift in terms of three things it forces us to rethink (mindset, workflows, and tools) so that we can develop generative AI applications more effectively.

Rethink Mindset

Traditional "AI apps" can be viewed as "ML apps": they took data inputs and used custom-trained models to return relevant predictions as output. Modern "AI apps" usually refer to generative AI apps that take natural language inputs (prompts), use pre-trained large language models (LLMs), and return original content to users as the response. This shift shows up in several ways.

- The target audience is different ➡ App developers, not data scientists.

- The generated assets are different ➡ Emphasize integrations, not predictions.

- The evaluation metrics are different ➡ Focus on fairness, groundedness, token usage.

- The underlying ML models are different ➡ Use pre-trained "Models-as-a-Service" instead of building your own.

Rethink Workflow

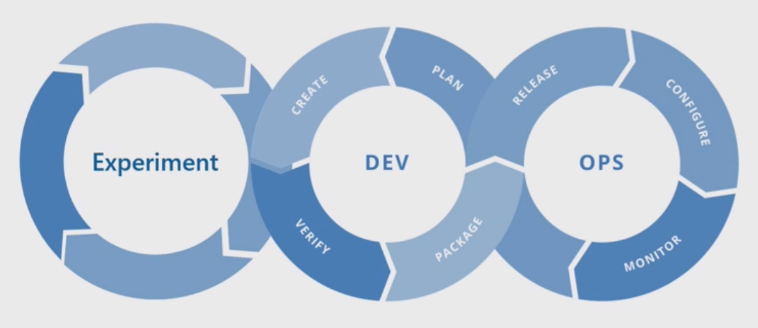

With MLOps, end-to-end application development involved a complex data science lifecycle. To "fit" into software development processes, this lifecycle was mapped to the higher-level workflow shown below, in which the complex data science steps (data preparation, model engineering, and model evaluation) are encapsulated in the experimentation phase.

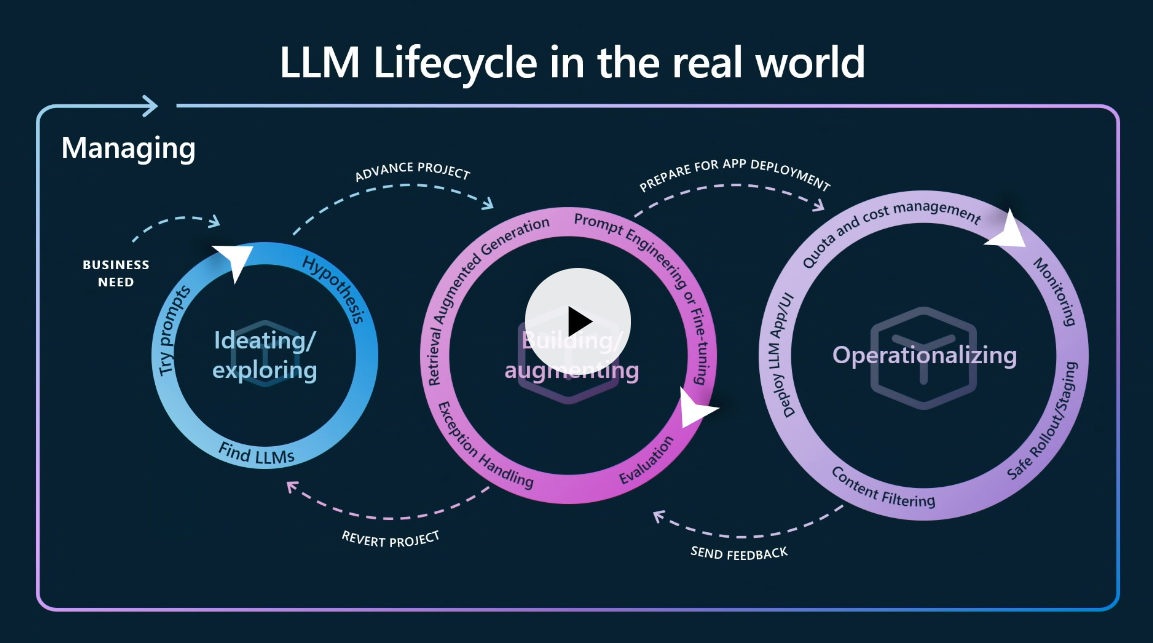

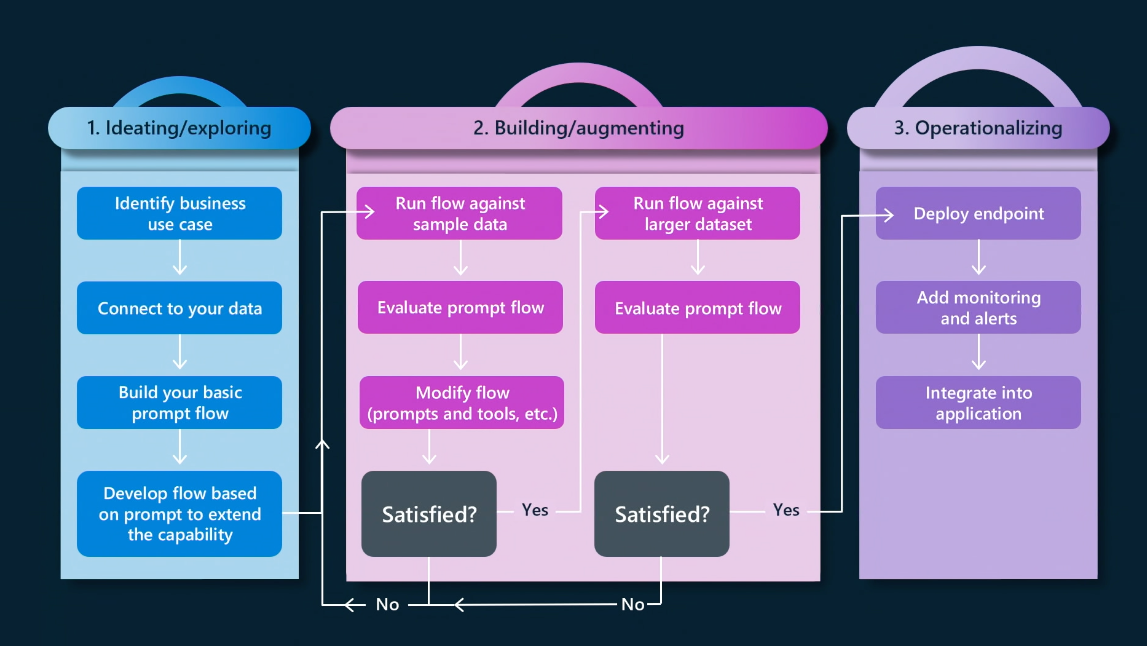

With LLMOps, those steps need to be rethought in the context of new requirements: natural language inputs (prompts), new techniques for improving quality (RAG, fine-tuning), new evaluation metrics (groundedness, coherence, fluency), and responsible AI assessment. This leads to a revised version of the 3-phase application development lifecycle, as shown above.
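RAG (retrieval-augmented generation) improves quality by retrieving relevant content and adding it to the prompt before the LLM is called. The sketch below illustrates only that augmentation step, in plain Python. It rests on a few assumptions. The keyword-overlap retriever is a toy stand-in for the vector or hybrid search a real RAG app would use. The names `retrieve` and `build_augmented_prompt` and the sample documents are hypothetical, and none of them are part of any Azure AI SDK.

```python
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Crude lowercase word split; a stand-in for a real tokenizer or embedding model.
    return re.findall(r"[a-z0-9]+", text.lower())


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents sharing the most terms with the query (toy retriever)."""
    query_terms = Counter(tokenize(query))

    def overlap(doc: str) -> int:
        # Count terms present in both query and document (multiset intersection).
        return sum((query_terms & Counter(tokenize(doc))).values())

    # sorted() is stable, so ties keep their original document order.
    return sorted(documents, key=overlap, reverse=True)[:k]


def build_augmented_prompt(query: str, documents: list[str], k: int = 2) -> str:
    """Ground the prompt by injecting retrieved context ahead of the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the context below. "
        "If the answer is not in the context, say you don't know.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    # Hypothetical product snippets standing in for an indexed knowledge base.
    docs = [
        "The TrailMaster tent is waterproof and weighs 2 kg.",
        "Our hiking boots come in sizes 6 to 13.",
        "Items can be returned within 30 days of purchase.",
    ]
    print(build_augmented_prompt("How much does the tent weigh?", docs, k=1))
```

The model itself is not retrained here. Quality improves because the prompt carries the supporting context. The trade-off is that the prompt grows longer, which matters under token-based pricing.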

We can unpack each phase to see the individual workflow steps, which are now designed around prompt-based inputs, token-based pricing, and the region-based availability of large language models and Azure AI services when provisioning resources.
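Under token-based pricing, the cost of each request scales with the tokens sent in the prompt and the tokens generated in the completion. Both are billed per fixed block of tokens (per 1,000 in this sketch). The sketch below estimates per-request and monthly cost from those counts. The prices are hypothetical placeholders, since actual rates vary by model, region, and deployment. The token counts are assumed to come from the usage data the model service reports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TokenPrice:
    """Hypothetical USD prices per 1,000 tokens; real rates vary by model and region."""
    prompt_per_1k: float
    completion_per_1k: float


def request_cost(prompt_tokens: int, completion_tokens: int, price: TokenPrice) -> float:
    """Cost of one call: prompt and completion tokens are billed at separate rates."""
    prompt_part = (prompt_tokens / 1000) * price.prompt_per_1k
    completion_part = (completion_tokens / 1000) * price.completion_per_1k
    return prompt_part + completion_part


def monthly_cost(
    requests_per_day: int,
    avg_prompt_tokens: int,
    avg_completion_tokens: int,
    price: TokenPrice,
    days: int = 30,
) -> float:
    """Project a monthly bill from average per-request token usage."""
    per_request = request_cost(avg_prompt_tokens, avg_completion_tokens, price)
    return per_request * requests_per_day * days


if __name__ == "__main__":
    price = TokenPrice(prompt_per_1k=0.5, completion_per_1k=2.0)  # placeholder rates
    # A RAG prompt with retrieved context can be far longer than the bare question.
    bare = request_cost(100, 500, price)
    with_context = request_cost(2000, 500, price)
    print(f"bare: ${bare:.3f}, with RAG context: ${with_context:.3f}")
    print(f"monthly (1,000 req/day): ${monthly_cost(1000, 2000, 500, price):,.2f}")
```

Prompt and completion rates are kept separate because they are often priced differently. Trimming retrieved context and capping response length therefore act as independent cost levers.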

Building LLM Apps: From Prompt Engineering to LLMOps

In the accompanying workshop, we'll walk through the end-to-end development process for our RAG-based LLM app, from prompt engineering (ideation, augmentation) to LLMOps (operationalization). We hope this makes some of these abstract concepts feel more concrete when you see them in action.

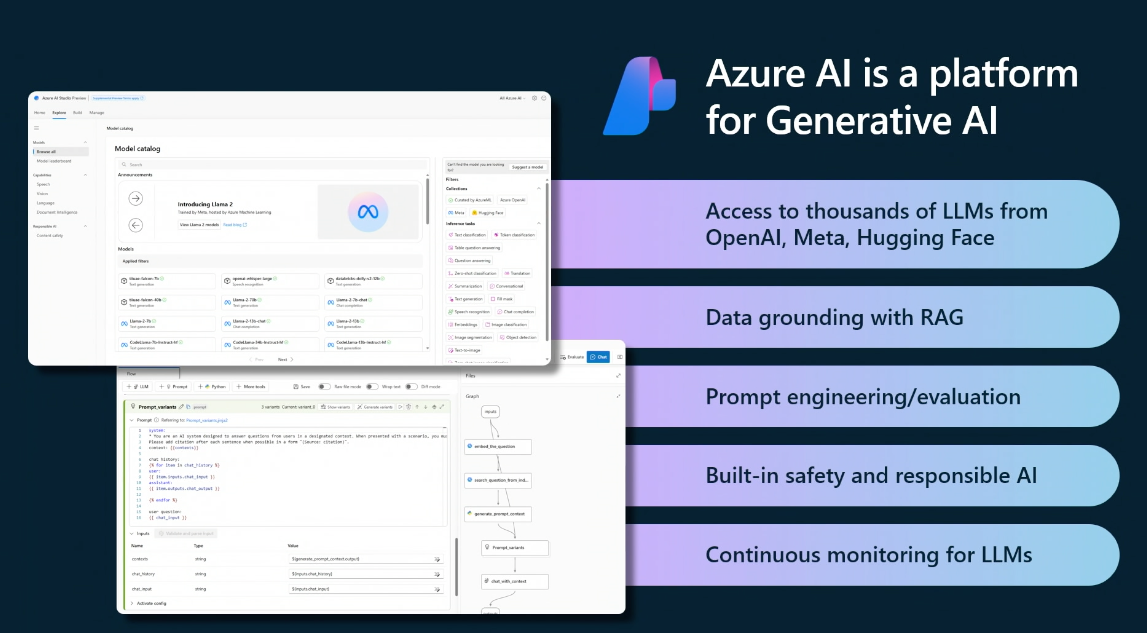

Rethink Tools

We can immediately see how this new application development lifecycle requires corresponding innovation in tooling to streamline the end-to-end development process from ideation to operationalization. The Azure AI platform has been retooled with exactly these requirements in mind. It is centered around Azure AI Studio, a unified web portal that:

- lets you "Explore" models, capabilities, samples & responsible AI tools

- gives you single-pane-of-glass visibility to "Manage" Azure AI resources

- provides UI-based development flows to "Build" your Azure AI projects

- has Azure AI SDK and Azure AI CLI options for "Code-first" development

The Azure AI platform is complemented by other developer tools and resources, including Prompt Flow, Visual Studio Code extensions, and Responsible AI guidance with built-in support for content filtering. We'll cover some of these in the Concepts and Tooling sections of this guide.

Using The Azure AI Platform

The abstract workflow will feel more concrete when we apply the concepts to a real use case. In the Workshop section, you'll get hands-on experience with these tools to give you a sense of their roles in streamlining your end-to-end developer experience.

- Azure AI Studio: Build & manage Azure AI projects and resources.

- Prompt Flow: Build, Evaluate & Deploy a RAG-based LLM App.

- Visual Studio Code: Use Azure, Prompt Flow, GitHub Copilot, and Jupyter Notebook extensions.

- Responsible AI: Content filtering and guidance for responsible prompt usage.