Let's Build Contoso Chat

About This Guide

This developer guide teaches you how to build, run, evaluate, deploy, and use an LLM application with Retrieval Augmented Generation. We'll walk you through the end-to-end development process, from prompt engineering to LLM Ops, with step-by-step instructions. Here's what you need to know:

- We'll build Contoso Chat, a customer service AI application for the Contoso Outdoors company website.

- We'll use Azure AI Studio and Prompt Flow to streamline LLMOps from ideation to operationalization!

What You'll Need

The main workshop takes about 60-75 minutes to complete. Much of that time is spent provisioning Azure resources and deploying your final Prompt Flow-based LLM application. Some steps may be automated or run in parallel to shorten it.

You will need:

- A GitHub account (with GitHub Codespaces access)

- An Azure account (with Azure OpenAI access)

- A modern browser (to run Codespaces and access Azure portals)

- Familiarity with Python, Jupyter Notebooks & VS Code

Instructor Led Sessions

This documentation is meant for self-guided completion of the workshop. The workshop may also be offered in instructor-led sessions at events like the 2024 Microsoft AI Tour, using a Lab On Demand platform that comes with a pre-provisioned Azure subscription and a built-in guide. The instructions should be comparable, but the experience may vary.

What You'll Build

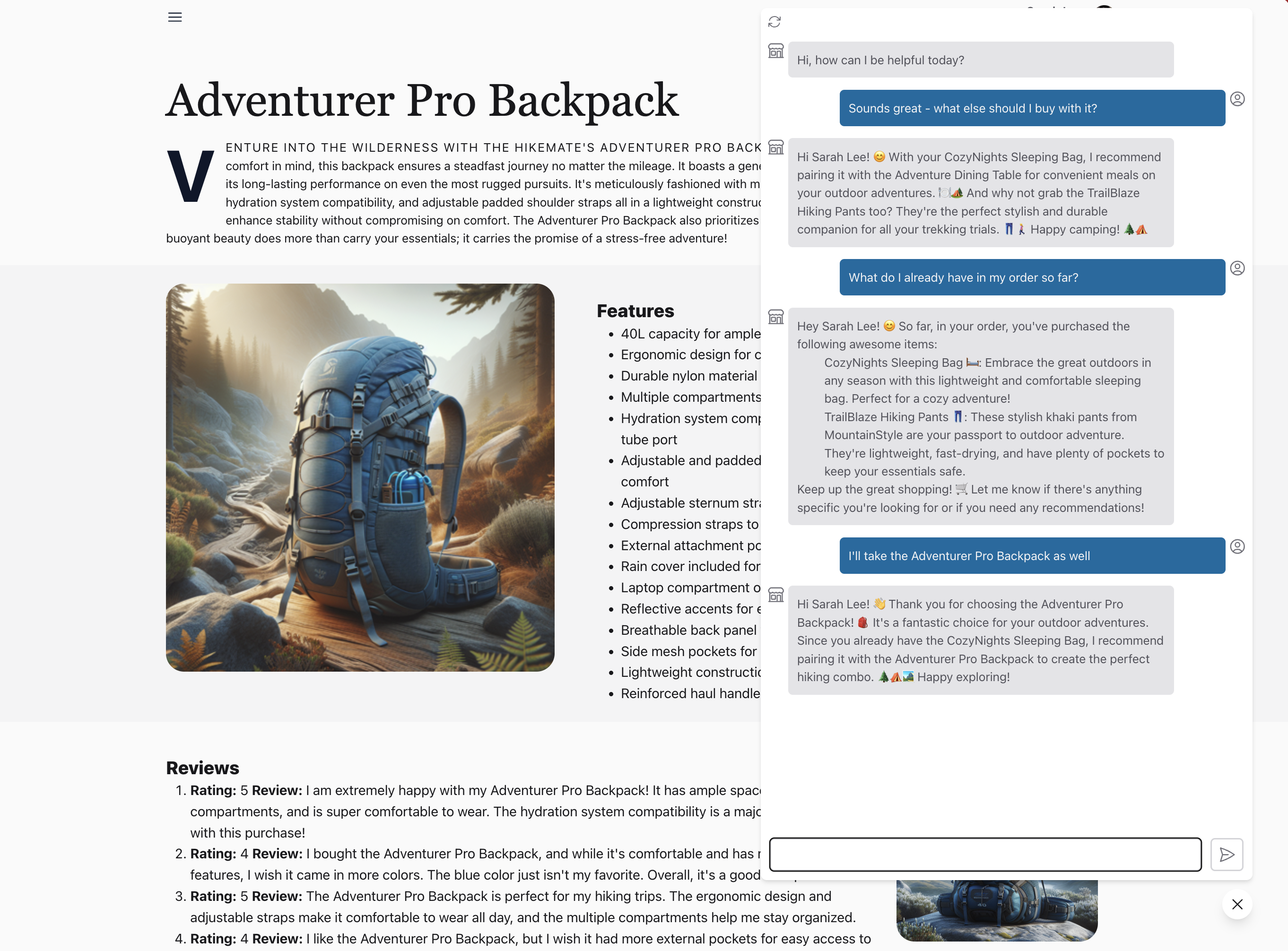

The main workshop focuses on building Contoso Chat, a customer support chat agent that uses Retrieval Augmented Generation (RAG) to answer questions for Contoso Outdoors, an online store for outdoor adventurers. The end goal is to integrate customer chat support into the website application for Contoso Outdoors, as shown above.
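To make the Retrieval Augmented Generation pattern concrete, here is a minimal sketch of its two core steps: retrieve relevant product information, then place it in the prompt sent to the model. This is not the Contoso Chat implementation. The catalog entries, the word-overlap scoring, and the function names `tokenize`, `retrieve`, and `build_prompt` are all hypothetical, and the sketch stops before calling any model.

```python
import re

# Hypothetical product snippets; a real app would query a search index or database.
CATALOG = [
    {"id": 1, "name": "Trail Tent", "text": "A lightweight two-person tent for backpacking trips."},
    {"id": 2, "name": "Summit Boots", "text": "Waterproof hiking boots with ankle support."},
    {"id": 3, "name": "Camp Stove", "text": "A compact stove for cooking at the campsite."},
]


def tokenize(text):
    """Lowercase the text and return its words as a set."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, documents, top_k=2):
    """Rank documents by word overlap with the question and keep the best matches.

    Word overlap is a stand-in for the vector or keyword search a real system would use.
    """
    query_tokens = tokenize(question)
    scored = []
    for doc in documents:
        overlap = len(query_tokens & tokenize(doc["name"] + " " + doc["text"]))
        if overlap > 0:
            scored.append((overlap, doc))
    # Sort on the score only, highest first; ties keep catalog order.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question, documents):
    """Combine the retrieved documents and the question into one grounded prompt."""
    context = "\n".join(f"- {d['name']}: {d['text']}" for d in documents)
    return (
        "You are a customer support agent for an outdoor gear store.\n"
        "Answer using only the product information below.\n\n"
        f"Products:\n{context}\n\n"
        f"Customer question: {question}\n"
    )


question = "Which tent is good for backpacking?"
matches = retrieve(question, CATALOG)
prompt = build_prompt(question, matches)
print(prompt)
```

The resulting prompt would then be sent to a chat-completion model. Grounding the model in retrieved data is what lets the agent answer from the store's own catalog rather than from general knowledge alone.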

Azure-Samples Repositories

The workshop refers to two different applications. The contoso-chat sample provides the basis for building our RAG-based LLM application, which implements the chat-completion AI. The contoso-web sample implements the Contoso Outdoors web application, with an integrated chat interface that website visitors will use to interact with our deployed AI application. We'll cover the details in the 01 | Introduction section of the guide.
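As a rough illustration of how a web front end might talk to a deployed chat endpoint, the sketch below builds (but does not send) an HTTP POST request using Python's standard library. The endpoint URL, the key placeholder, the bearer-token header, and the `question` and `chat_history` payload fields are assumptions for illustration only. Check your own deployment for the actual request schema and authentication method.

```python
import json
import urllib.request


def build_chat_request(endpoint_url, api_key, question, chat_history):
    """Build a JSON POST request for a hypothetical deployed chat endpoint."""
    # Assumed payload shape; the real flow defines its own input names.
    payload = {"question": question, "chat_history": chat_history}
    return urllib.request.Request(
        endpoint_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            # Assumed auth scheme: a bearer key issued for the endpoint.
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# Placeholder values; nothing is sent over the network here.
request = build_chat_request(
    "https://example.invalid/score",
    "<your-api-key>",
    "What tents do you sell?",
    [],
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

To actually send the request you would pass it to `urllib.request.urlopen` and parse the JSON response. Keeping request construction separate from sending makes the payload easy to inspect and test.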

How You'll Build It

The workshop is broadly organized into these steps, some of which may run in parallel.

- 1. Lab Overview

- 2. Setup Dev Environment (GitHub Codespaces)

- 3. Provision Azure (via Azure Portal, Azure AI Studio)

- 4. Configure VS Code (Azure Login, Populate Data)

- 5. Setup Promptflow (Local & Cloud Connections)

- 6. Run & Evaluate Flow (Locally on VS Code)

- 7. Deploy & Use Flow (Cloud, via Azure AI Studio)

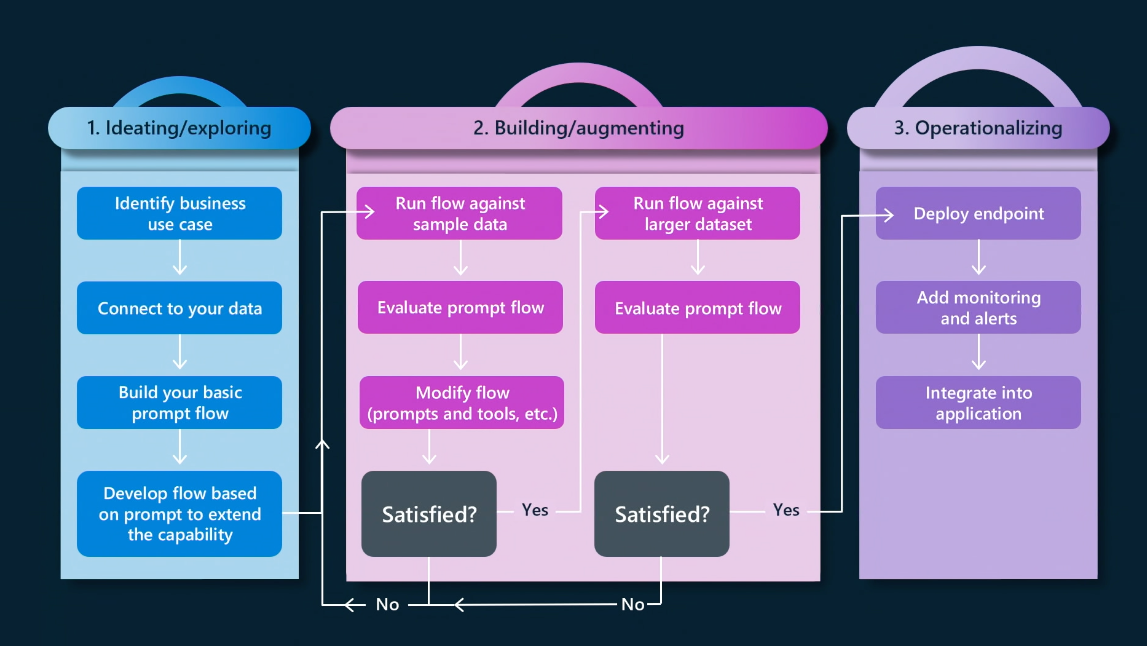

With this workshop, you get hands-on experience with the ideating/exploring and building/augmenting phases of the LLM App development lifecycle. The workshop ends with deploying the endpoint to kickstart the operationalizing phase of LLM Ops.

If time permits, continue operationalizing your app with the following Bonus Exercises:

- 8.1 | Contoso Website Chat (Integration)

- 8.2 | GitHub Actions Deploy (Automation)

- 8.3 | Intent-based Routing (Multi-Agent)

- 8.4 | Content Filtering (Responsible AI)

Get Started 🚀

- Want to understand the app dev lifecycle first? Start here 👉🏽 01 | Introduction.

- Want to jump straight into building the application? Start here 👉🏽 02 | Workshop.

- Want to learn more about LLM Ops? Watch this first 👇🏽 #MSIgnite 2023 Breakout

Breakout Session: End-to-End App Development: Prompt Engineering to LLM Ops

Abstract | Prompt engineering and LLMOps are pivotal in maximizing the capabilities of Language Models (LLMs) for specific business needs. This session offers a comprehensive guide to Azure AI's latest features that simplify the AI application development cycle. We'll walk you through the entire process—from prototyping and experimenting to evaluating and deploying your AI-powered apps. Learn how to streamline your AI workflows and harness the full potential of Generative AI with Azure AI Studio.