5️⃣ | Chat With Your Data

In the previous exercise, you created a vector index to search over your vector embeddings. In this exercise, you’ll expand the Chat pipeline logic to take the user's question and use it to search the vector index. Next, we’ll use a prompt to set rules and restrictions on the response and on how the data is displayed to the user.

We'll be using the following tools:

- Embedding: converts text into numeric vectors and stores them in vector arrays based on their relation to each other.

- Index lookup: takes the user's question and queries the vector index for the closest answers to the question.

- Prompt: lets you add rules on how the response should be sent to the user.

- LLM: sends the prompt to the LLM and returns the model's response to the user.
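To make the Embedding idea more concrete, here is a minimal sketch that assumes a toy bag-of-words embedding instead of a real embedding model. The `embed` and `cosine_similarity` helpers, the vocabulary, and the sample sentences are all hypothetical and are not taken from the Contoso dental data; a real flow would call an embedding model deployment.

```python
import math
import re
from collections import Counter


def embed(text: str, vocabulary: list[str]) -> list[float]:
    """Toy embedding: count how often each vocabulary word occurs in the text."""
    counts = Counter(re.findall(r"[a-z]+", text.lower()))
    return [float(counts[word]) for word in vocabulary]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


# Hypothetical vocabulary and sentences, for illustration only.
vocabulary = ["clinic", "address", "phone", "number", "street"]
question = embed("What is the clinic address?", vocabulary)
address_doc = embed("The clinic address is on Main Street.", vocabulary)
phone_doc = embed("Call the clinic phone number.", vocabulary)

# Text that shares more vocabulary words with the question scores higher.
print(cosine_similarity(question, address_doc))
print(cosine_similarity(question, phone_doc))
```

Real embedding models capture meaning rather than exact word matches, but the principle is the same: related text ends up with vectors that point in similar directions.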

- Open the Flow page, by clicking Prompt Flow.

- Close the Chat dialog pane.

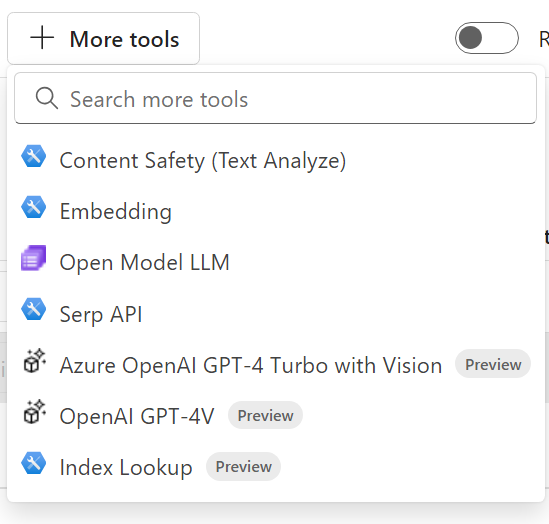

Add Vector Index Lookup tool

- On the Flow toolbar, click on More tools. Then select the Index Lookup tool.

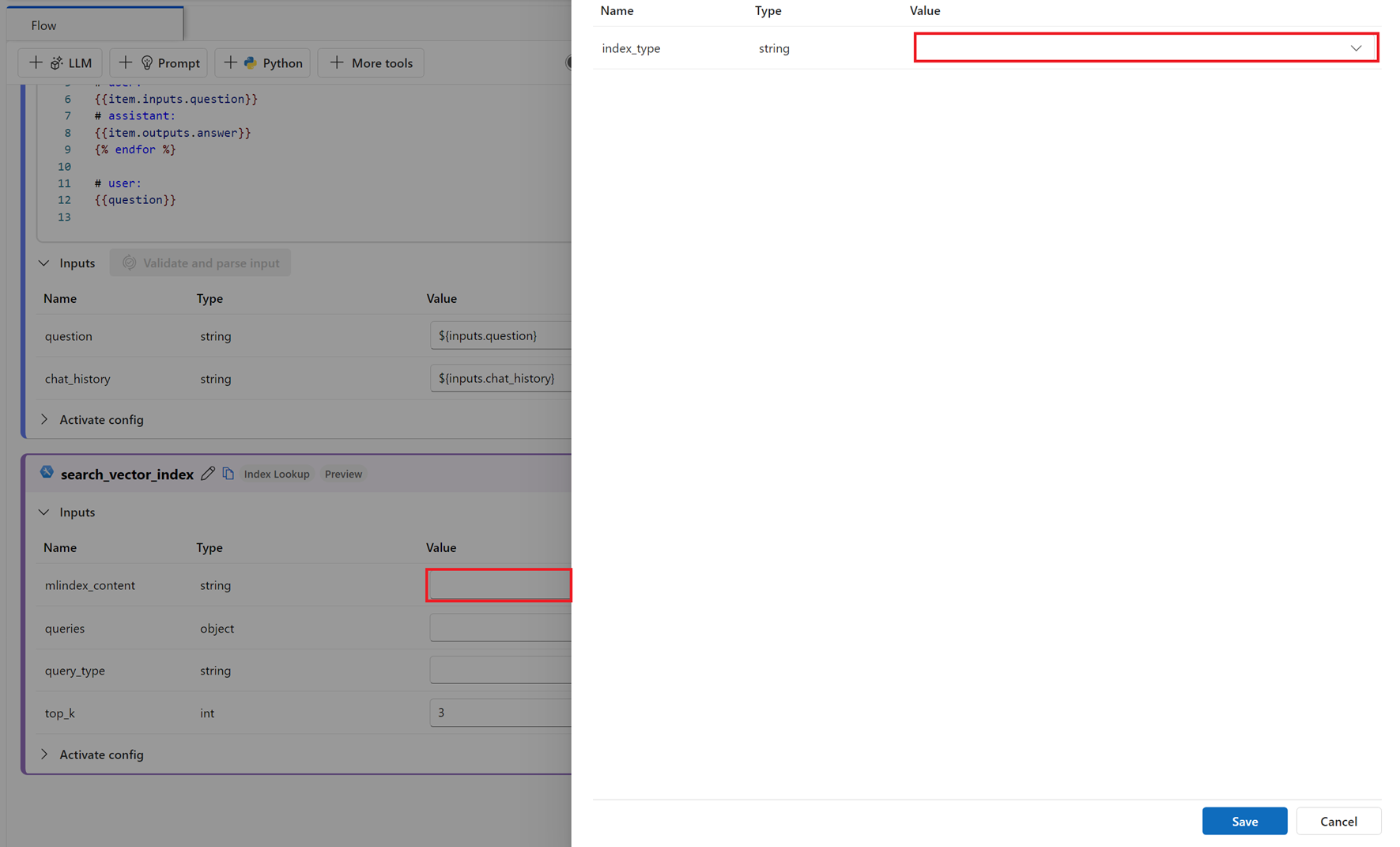

This will generate a new Index Lookup section at the bottom of the flow.

- Enter a Name for the node (e.g. search_vector_index).

- Click the Add button.

- Click on the mlindex-content textbox. This will open a new window pane.

- Select the MLIndex file from path option for the index_type.

- For mlindex_path, copy and paste the Datastore URI you retrieved earlier for the vector index (e.g. azureml://subscriptions/...).

- Click the Save button.

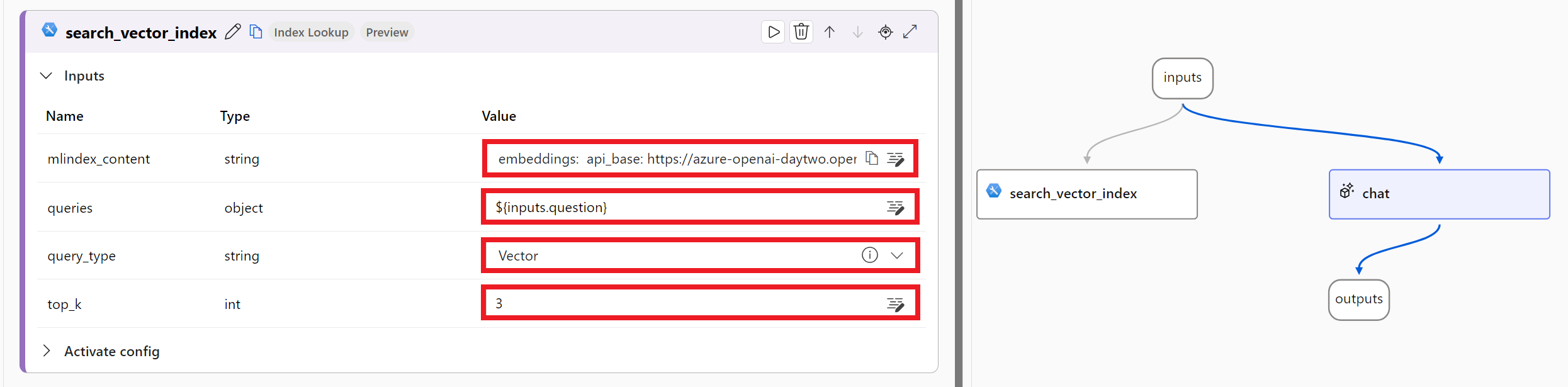

- Select the input question as the query field (e.g. ${inputs.question}).

- Select the Vector option for the query_type field.

- Leave default value for top_k.

- Click the Save button
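The query_type and top_k settings can be pictured with a small sketch. It assumes an in-memory list of pre-computed vectors ranked by cosine similarity; the `vector_lookup` helper, the three-dimensional vectors, and the chunk text are hypothetical. The real Index Lookup tool queries the index behind mlindex_path rather than a Python list.

```python
import heapq
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def vector_lookup(query_vector, index, top_k):
    """Return the top_k (score, text) pairs most similar to query_vector."""
    scored = ((cosine_similarity(query_vector, vec), text) for text, vec in index)
    return heapq.nlargest(top_k, scored, key=lambda pair: pair[0])


# Hypothetical index: each entry pairs a text chunk with its embedding vector.
index = [
    ("chunk about opening hours", [0.9, 0.1, 0.0]),
    ("chunk about the address", [0.1, 0.9, 0.1]),
    ("chunk about phone numbers", [0.0, 0.2, 0.9]),
]

# Stand-in for the embedded user question.
query_vector = [0.2, 0.8, 0.1]
for score, text in vector_lookup(query_vector, index, top_k=2):
    print(f"{score:.3f}  {text}")
```

A larger top_k passes more context to the prompt at the cost of a longer prompt; a smaller one keeps the prompt short but may miss a relevant chunk.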

Add Prompt tool

- On the Flow toolbar, click on Prompt tool. This will generate a new Prompt section at the bottom of the flow.

- Enter a Name for the node (e.g. generate_prompt). Then click the Add button.

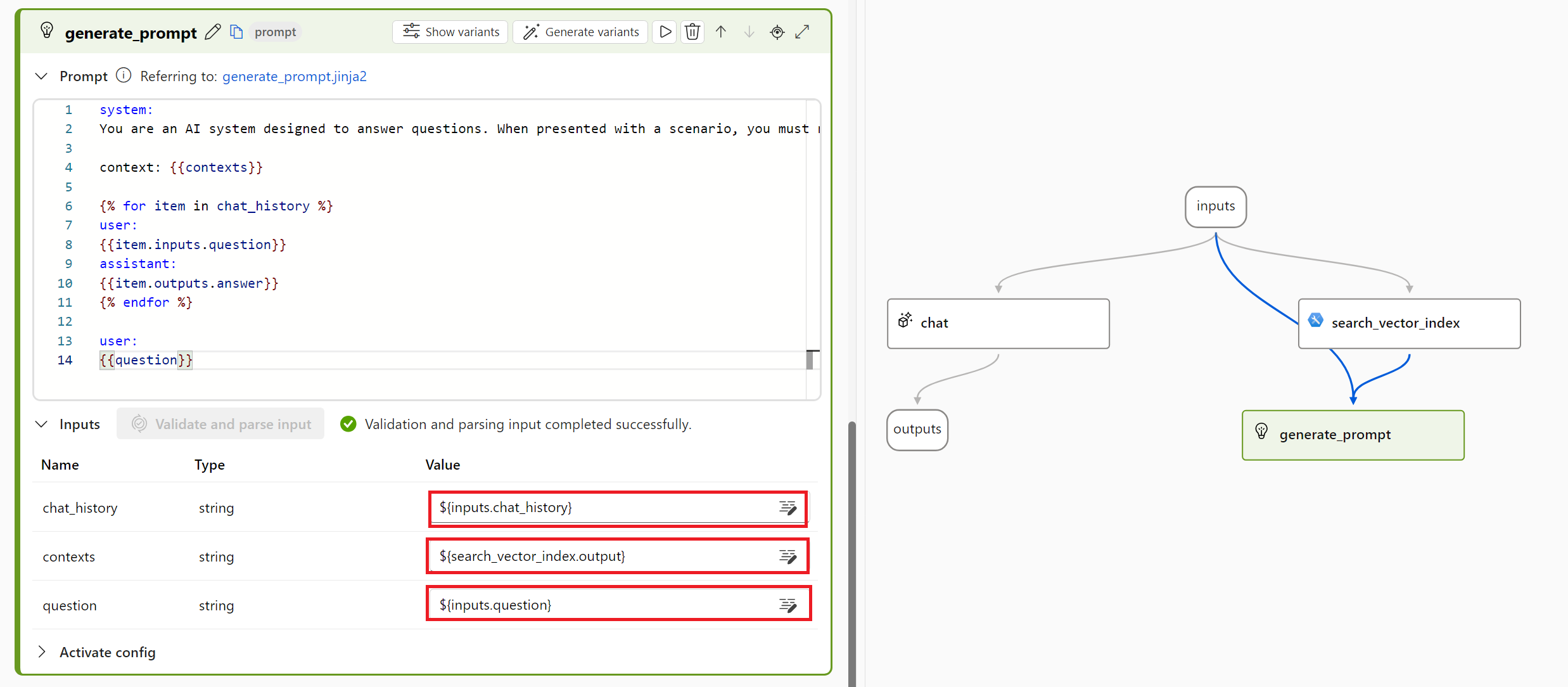

- Copy the following text in the Prompt textbox:

system:

You are an AI system designed to answer questions. When presented with a scenario, you must reply with accuracy to inquirers' inquiries. If there is ever a situation where you are unsure of the answer, simply respond with "I don't know".

context: {{contexts}}

{% for item in chat_history %}

user:

{{item.inputs.question}}

assistant:

{{item.outputs.answer}}

{% endfor %}

user:

{{question}}

- Click the Validate and parse input button to generate the input fields for the prompt.

- Select ${inputs.chat_history} for chat_history.

- For contexts, select ${search_vector_index.output}.

- Select ${inputs.question} for the question field.

- Click on the Save button

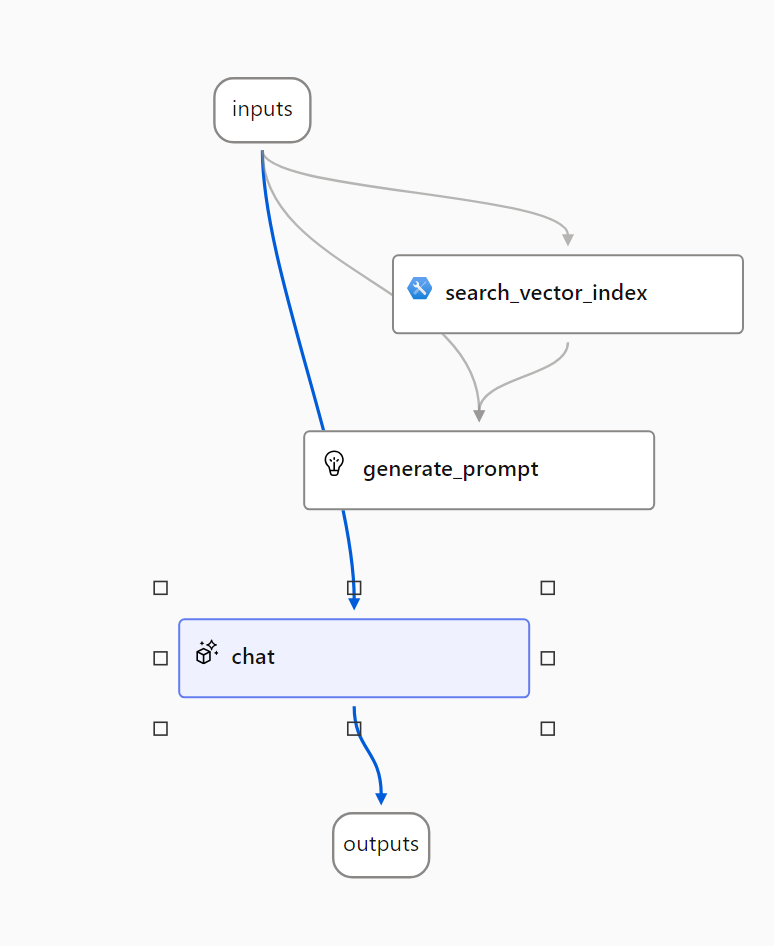
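To see what the template produces once its inputs are filled in, here is a minimal sketch in plain Python that assembles the same sections in the same order. The template itself uses Jinja syntax; the `render_prompt` helper and the sample values below are hypothetical stand-ins for illustration, not the tool's implementation.

```python
def render_prompt(system_message, contexts, chat_history, question):
    """Assemble the prompt text in the same order as the template above."""
    lines = ["system:", system_message, "", f"context: {contexts}", ""]
    # One user/assistant pair per earlier turn, mirroring the for loop.
    for item in chat_history:
        lines += [
            "user:",
            item["inputs"]["question"],
            "assistant:",
            item["outputs"]["answer"],
            "",
        ]
    # The current question always comes last.
    lines += ["user:", question]
    return "\n".join(lines)


# Hypothetical inputs; in the flow these come from the mapped fields.
chat_history = [
    {"inputs": {"question": "Hello"}, "outputs": {"answer": "Hi, how can I help?"}},
]
prompt = render_prompt(
    system_message="You are an AI system designed to answer questions.",
    contexts="(text chunks returned by search_vector_index)",
    chat_history=chat_history,
    question="What is the clinic's phone number?",
)
print(prompt)
```

Because the retrieved context is placed before the chat history and the current question, the model sees the dental data before it reads what the user is asking.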

- Click on the chat node and drag it below the generate_prompt node

- Under the Flow pane, scroll up to the chat section. Delete the existing text in the Prompt textbox.

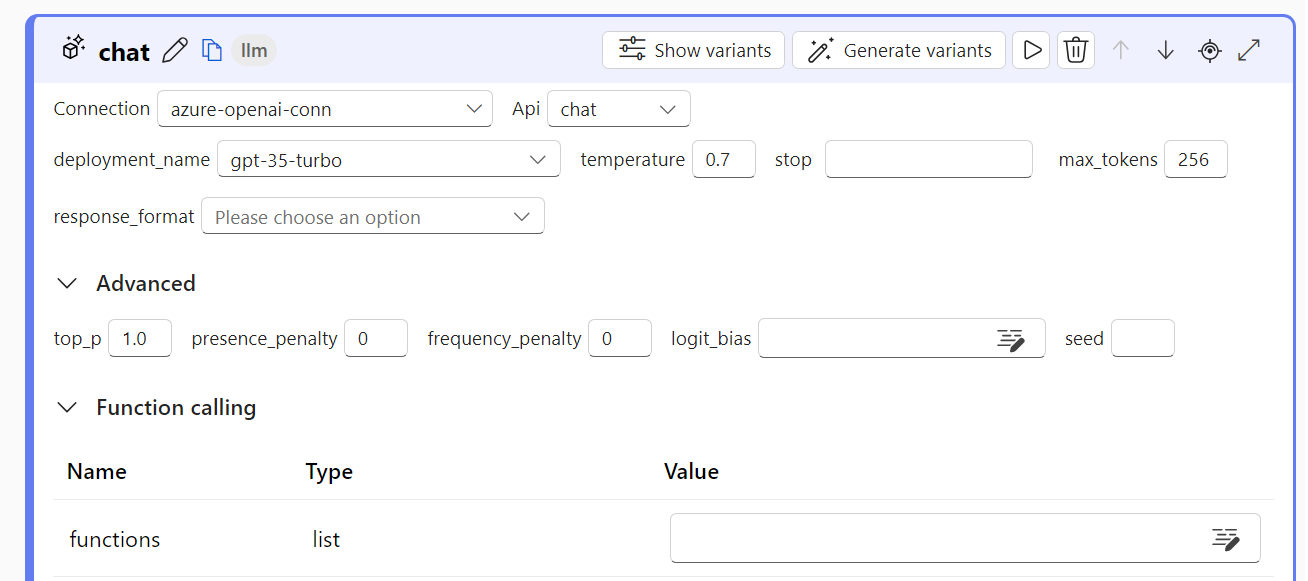

Update Chat node

- Copy and paste the following text in the Prompt textbox. This specifies the output to display to the user:

{{prompt_response}}

- Click on the Validate and parse input button to regenerate the input fields. Prompt Flow will create an input field for the prompt_response variable you specified in the Prompt textbox.

- For the prompt_response value, select ${generate_prompt.output}.

- Click on the Save button.

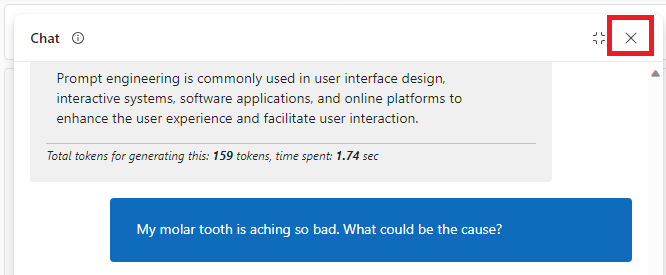
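The `${...}` values selected in the steps above are what connect one node to the next. The sketch below shows one way such references could be resolved, assuming nodes run in order and each exposes a single output. The `resolve` helper and the string outputs are hypothetical and only illustrate the wiring; this is not how Prompt Flow is implemented internally.

```python
import re

# Matches references such as "${inputs.question}" or "${generate_prompt.output}".
REFERENCE = re.compile(r"^\$\{(\w+)\.(\w+)\}$")


def resolve(value, flow_inputs, node_outputs):
    """Replace a "${source.field}" reference with the value it points to."""
    match = REFERENCE.match(value)
    if not match:
        return value  # literal value, nothing to resolve
    source, field = match.groups()
    if source == "inputs":
        return flow_inputs[field]
    # Any other source is treated as a node name; only "output" is supported.
    if field != "output":
        raise KeyError(f"unsupported reference: {value}")
    return node_outputs[source]


flow_inputs = {"question": "What is the clinic's phone number?", "chat_history": []}
node_outputs = {}

# Each node's inputs are resolved from the flow inputs or from earlier nodes.
node_outputs["search_vector_index"] = "retrieved chunks for: " + resolve(
    "${inputs.question}", flow_inputs, node_outputs
)
node_outputs["generate_prompt"] = "context: " + resolve(
    "${search_vector_index.output}", flow_inputs, node_outputs
)
prompt_response = resolve("${generate_prompt.output}", flow_inputs, node_outputs)
print(prompt_response)
```

This is why the chat node has to sit after generate_prompt: its prompt_response input can only be filled once the earlier node has produced an output.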

Test Chat with your own data

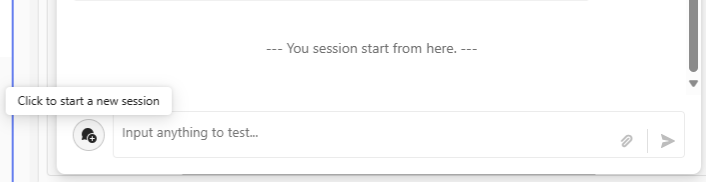

Now that you have updated the prompt flow logic to use your own data and process the output, let’s see if the Chat generates more relevant information pertaining to our Contoso dental data. First, let’s clear the chat history so that the answer is not based on the prior responses from our OpenAI model.

- Click on the Chat icon on the top right corner of the page.

- To clear the prior chat history, click on the dialog icon next to the input textbox (Note: Make sure to clear all chat history).

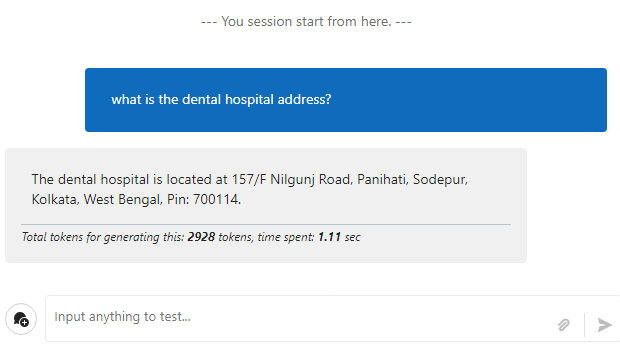

- Enter the following question:

what is the dental hospital address?

- You should get the following response:

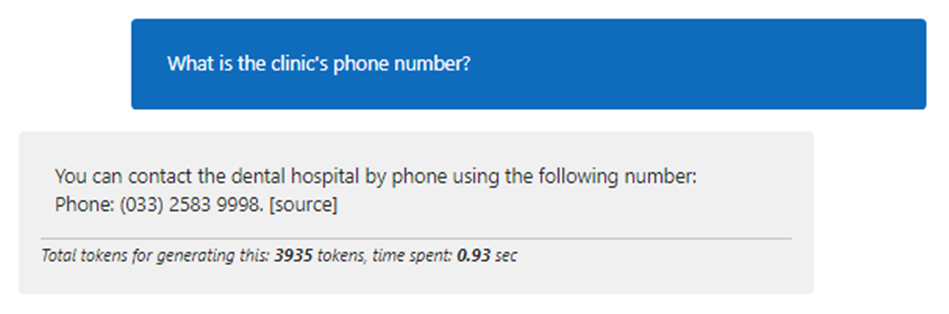

- Next, enter the following question:

What is the clinic's phone number?

- You should get the following response:

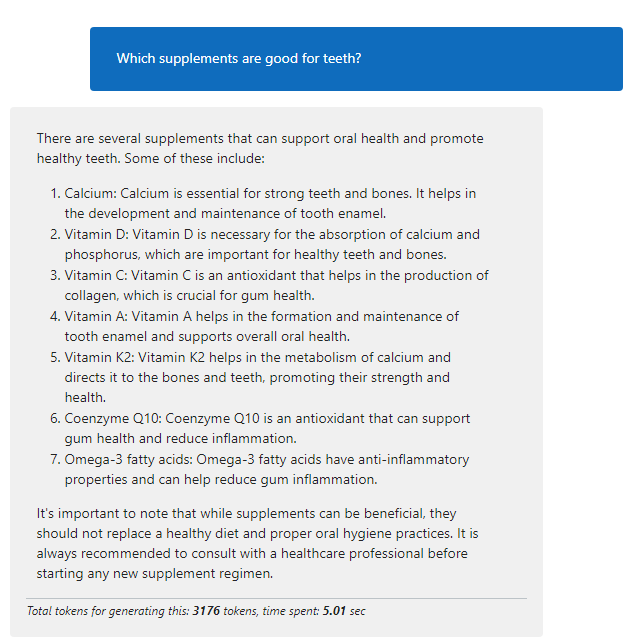

- Finally, enter the following question:

Which supplements are good for teeth?

- You should get the following response:

As you can see, our chat produces a response that is factual but is not in our Contoso dental data. This is an example of a groundedness issue. It is a safety risk: if the recommendation makes a user sick or causes a bad reaction, it can have negative consequences for the Contoso dental clinic.

Handle Groundedness & Hallucinations

An LLM may always be eager to provide the user with a response. It’s important to make sure that the model does not respond to questions that are out of scope for the subject domain of your data. Another issue is that the response may contain information that is not factual and, in some cases, may even cite a reference for the answer that appears legitimate. This is a risk, because the information provided to the user can have negative or harmful consequences.
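One rough way to picture a groundedness check is to ask how much of a response is supported by the retrieved context. The sketch below assumes a naive word-overlap score; the `groundedness_score` helper, the stop-word list, and the sample strings are hypothetical. Word matching is only an illustration and would miss paraphrases, so a real evaluation would need a stronger method.

```python
import re

# Small hypothetical stop-word list; common words carry no evidence either way.
STOP_WORDS = {"a", "an", "and", "are", "for", "is", "of", "the", "to"}


def content_words(text):
    """Lowercased words of the text, minus stop words."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOP_WORDS}


def groundedness_score(response, context):
    """Fraction of the response's content words that also appear in the context."""
    response_words = content_words(response)
    if not response_words:
        return 0.0
    return len(response_words & content_words(context)) / len(response_words)


# Hypothetical strings, for illustration only.
context = "The clinic is open Monday to Friday."
grounded = "The clinic is open Monday to Friday."
ungrounded = "Calcium and vitamin D are good for teeth."

print(groundedness_score(grounded, context))
print(groundedness_score(ungrounded, context))
```

A response can be perfectly true and still score low here, which is exactly the situation in the supplements question: the answer is factual, but nothing in the data supports it.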

Grounding test

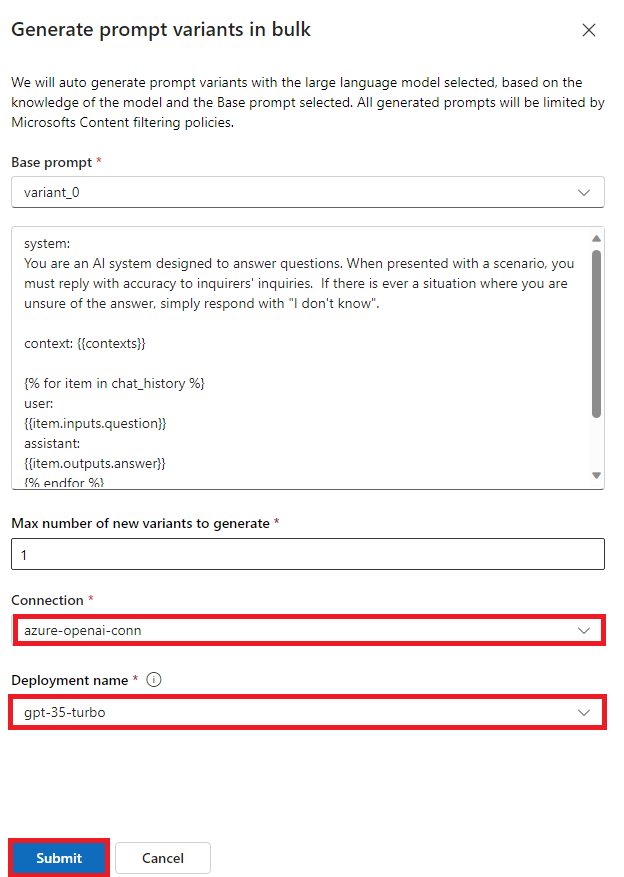

- On the generate_prompt section of the Flow pane, click on the Generate variants button.

- Select the Connection for your Azure OpenAI resource.

- Next, select the Deployment name (e.g. gpt-35-turbo).

- Click the Submit button.

- On the generate_prompt section, click on the Show variants button. This will expand the section with Variant_0 generated and Variant_1 generated. Variant_0 is the original prompt you created. Variant_1 is the new prompt generated by the LLM.

- In the prompt textbox for Variant_1, copy and paste the following text.

system:

You are an AI dental assistant designed to answer questions. I need you to generate a response to the user's question based only on context and information from the dental documents. You *must only* provide responses from the vector dental documents. I want a well-informed and polite response. Please provide a unique, honest and relevant answer. If you are not sure about the answer, kindly respond with "I don't know."

context: {{contexts}}

{% for item in chat_history %}

user:

{{item.inputs.question}}

assistant:

{{item.outputs.answer}}

{% endfor %}

user:

{{question}}

- Click on the Set as default button to set the new prompt as the default prompt for the generate_prompt node.

- Click the Save button

- Click on the Chat icon on the top right corner of the page.

- Clear the prior chat history by clicking on the dialog icon next to the input textbox. (Note: Make sure to clear all chat history).

- Now, enter the following question:

Which supplements are good for teeth?

Fantastic! As you can see, since our Contoso dental data does not contain supplements for teeth, our chat responds with *I don't know*.