5.2 Refactor: Make it Better

1. Contoso Chat Sample

Once you're familiar with the basic setup, ideation, and evaluation capabilities, you can explore the Contoso Chat sample on your own for more advanced insights.

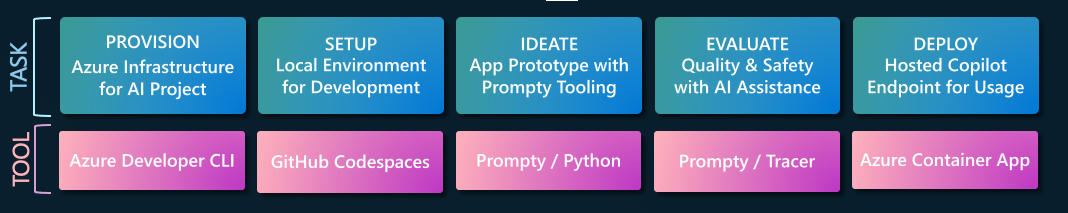

This sample uses the same retail dataset as this RAG Chat workshop, but streamlines the end-to-end development workflow with core developer tools like the Azure Developer CLI, which automates provisioning and deployment. The sample also teaches you how to package your application prototype and deploy it to production using Azure Container Apps.

The Contoso Chat Workshop walks you through the end-to-end development journey from prompt to production, using an AI App template that can be provisioned and deployed with a single command (azd up). The sample also shows you how to use a FastAPI application server (to create an API endpoint for accepting requests to our chat AI) and how to package up the application as a Docker container that can be deployed to an Azure Container Apps endpoint for real-world interactions.

Contoso Chat is currently in v3. An updated v4 (Jan 2025) will reflect recent Azure AI SDK updates.

2. RAG Chat Sample (v2)

Another option is to use this existing repository and workshop as a sandbox sample that you can customize to your own requirements. Here are some suggestions for ways to experiment further:

- Bring Your Own Data - Walk through the steps but replace `product.csv` with your own data, and understand the implications for all the other steps in the workflow. For example, you will need to create a new set of evaluation test inputs (for your use case) and refactor the prompt templates (for intent mapping and chat-with-products) to reflect new instructions or context.

- Integrate More Sources - The basic sample has a single data source (the product catalog index). However, the `data/` folder provides additional data sources (customer data, product manual data) that can be used for even richer experiences. Think about how you can refactor the existing code to support data retrieval from multiple sources that store this data (e.g., customer data in Azure Cosmos DB).

- Write Custom Evaluators - The default evaluators for quality reflect common best practices. However, you may want to assess responses against custom criteria. Think about how you can write a custom evaluator and add it to the evaluation script. For example: evaluate responses for politeness or for being kid-friendly.

- Explore Richer RAG Patterns - The core of RAG patterns is the retrieval of relevant knowledge from various sources, including Azure AI Search. Explore new algorithms or ways of combining context to make the retrieved documents more relevant to application needs.

- Make it Agentic - Agentic workflows are dominating the AI ecosystem today. Agentic RAG refers to the use of AI agents for information retrieval, where the agent mediates between multiple data sources to enhance the RAG pipeline. Read more here.
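As a starting point for the custom evaluator suggestion above, here is a minimal sketch of a politeness evaluator. It assumes that an evaluator can be a plain Python callable that accepts keyword arguments (here `response`) and returns a dictionary of scores; the workshop's evaluation script may expect a different signature. The names `PolitenessEvaluator` and `run_evaluators`, the keyword lists, and the 1 to 5 scale are all hypothetical and chosen only for illustration. A production evaluator would more likely use a prompt-based (LLM-judged) approach than keyword matching.

```python
from dataclasses import dataclass


@dataclass
class PolitenessEvaluator:
    """Hypothetical keyword-based evaluator (illustration only, not part of the workshop)."""

    # Assumed marker lists; tune or replace these for your own use case.
    polite_markers: tuple = ("please", "thank", "happy to help", "sorry")
    rude_markers: tuple = ("stupid", "obviously", "shut up")

    def __call__(self, *, response: str, **kwargs) -> dict:
        text = response.lower()
        # Count how many distinct markers appear in the response.
        polite_hits = sum(marker in text for marker in self.polite_markers)
        rude_hits = sum(marker in text for marker in self.rude_markers)
        # Start at a neutral 3, reward polite markers, penalize rude ones,
        # then clamp the result to the 1..5 range.
        score = max(1, min(5, 3 + polite_hits - 2 * rude_hits))
        return {
            "politeness": score,
            "polite_hits": polite_hits,
            "rude_hits": rude_hits,
        }


def run_evaluators(rows, evaluators):
    """Apply every evaluator to every row and collect the scores per row."""
    results = []
    for row in rows:
        # Each row is passed as keyword arguments; evaluators ignore extras.
        scores = {name: evaluator(**row) for name, evaluator in evaluators.items()}
        results.append({**row, "scores": scores})
    return results


if __name__ == "__main__":
    rows = [
        {"query": "Which tent should I buy?",
         "response": "Thank you for asking! I'm happy to help you pick a tent."},
        {"query": "Is this jacket waterproof?",
         "response": "That is obviously a stupid question."},
    ]
    for result in run_evaluators(rows, {"politeness": PolitenessEvaluator()}):
        print(result["query"], "->", result["scores"]["politeness"])
```

Keeping the evaluator a simple callable that returns a dictionary makes it easy to register alongside other evaluators by name, and the `**kwargs` parameter lets it ignore row fields it does not need (such as `query`).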